What Is 5G Security?

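5G security is the area of wireless network security focused on fifth-generation (5G) wireless networks. 5G security technologies help protect 5G infrastructure and 5G-enabled devices from data loss, cyberattacks, hackers, malware, and other threats. Compared with previous generations, 5G relies more heavily on virtualization, network slicing, and software-defined networking (SDN), which exposes it to new types of attacks.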

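Why 5G Security Is Important

As more countries deploy 5G technology, it is expected to have an enormous impact on critical infrastructure and on industries worldwide. However, the technical advances of 5G also introduce new cybersecurity risks and amplify existing ones, to a degree that telecom operators and their customers cannot ignore.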

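- Expanded attack surface: More connected devices and more infrastructure moving to the cloud give cybercriminals a wider range of entry points to exploit. In addition, because 5G can interconnect millions of devices per square kilometer (compared with tens of thousands for 4G LTE and Wi-Fi devices), an exploit on a single device can cascade into further vulnerabilities and compromise the security of the entire ecosystem.

- Network slicing vulnerabilities: 5G infrastructure supports network slicing, which creates multiple virtual network segments side by side within a 5G network, each assigned to a specific application, company, or industry. This approach is efficient, but it introduces new risks such as intra-slice attacks. Slices must be securely isolated and segmented to prevent lateral movement by attackers.

- Supply chain risks: 5G depends on a global supply chain of hardware, software, and services. Securing every component is difficult, and threat actors may target one or more points in the hardware or software supply chain to try to break into 5G networks and devices.

- Data privacy concerns: 5G networks carry and process massive volumes of data, raising privacy concerns around the ever-growing amount of personal and sensitive data involved. Unauthorized access to that data often leads to identity theft, fraud, and other forms of misuse.

- Greater risk to critical infrastructure: 5G technology is becoming integrated with national critical infrastructure such as power grids, transportation systems, and healthcare facilities. A breach could therefore have serious consequences for public safety, patient health, industrial operations, national security, and even the stability of the economy as a whole.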

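5G is set to drive the next wave of technological innovation across industries and government agencies, but it comes with greater (and in some cases unquantifiable) security risks.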

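Benefits of 5G Security

Service providers and mobile network operators are responsible for ensuring the privacy, security, and integrity of customer data and operations on their 5G networks. Effective security measures give providers and operators the following benefits: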

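- Detect and respond to potential security threats faster through real-time security monitoring and analytics

- Minimize the impact of cyberattacks, reducing the associated losses and reputational damage

- Demonstrate a commitment to the integrity of customer data and operations, earning loyalty and trust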

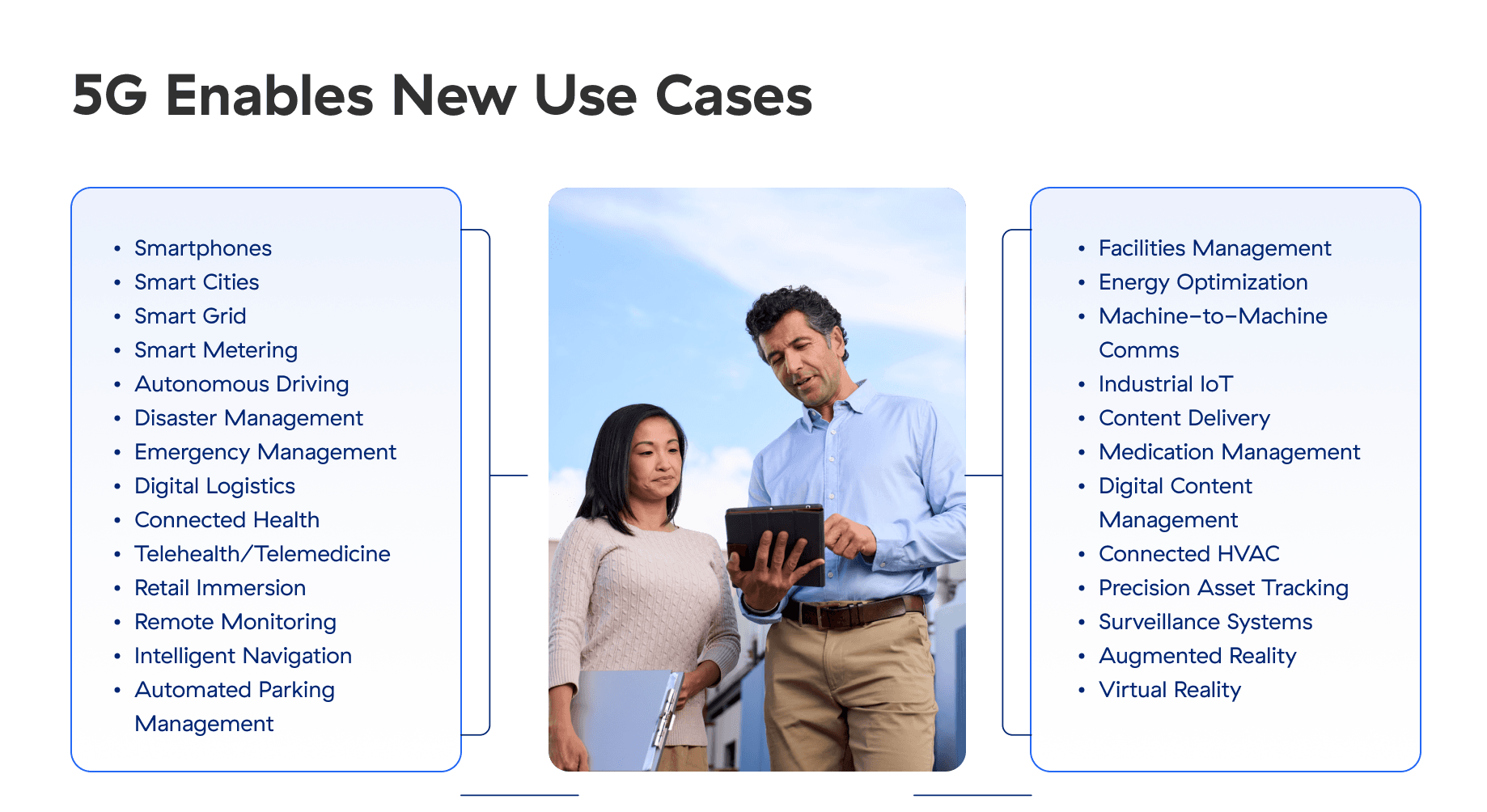

As smart manufacturing (Industry 4.0) gains momentum, companies that use IoT devices are eager to adopt 5G networks to keep those devices connected and performing well.

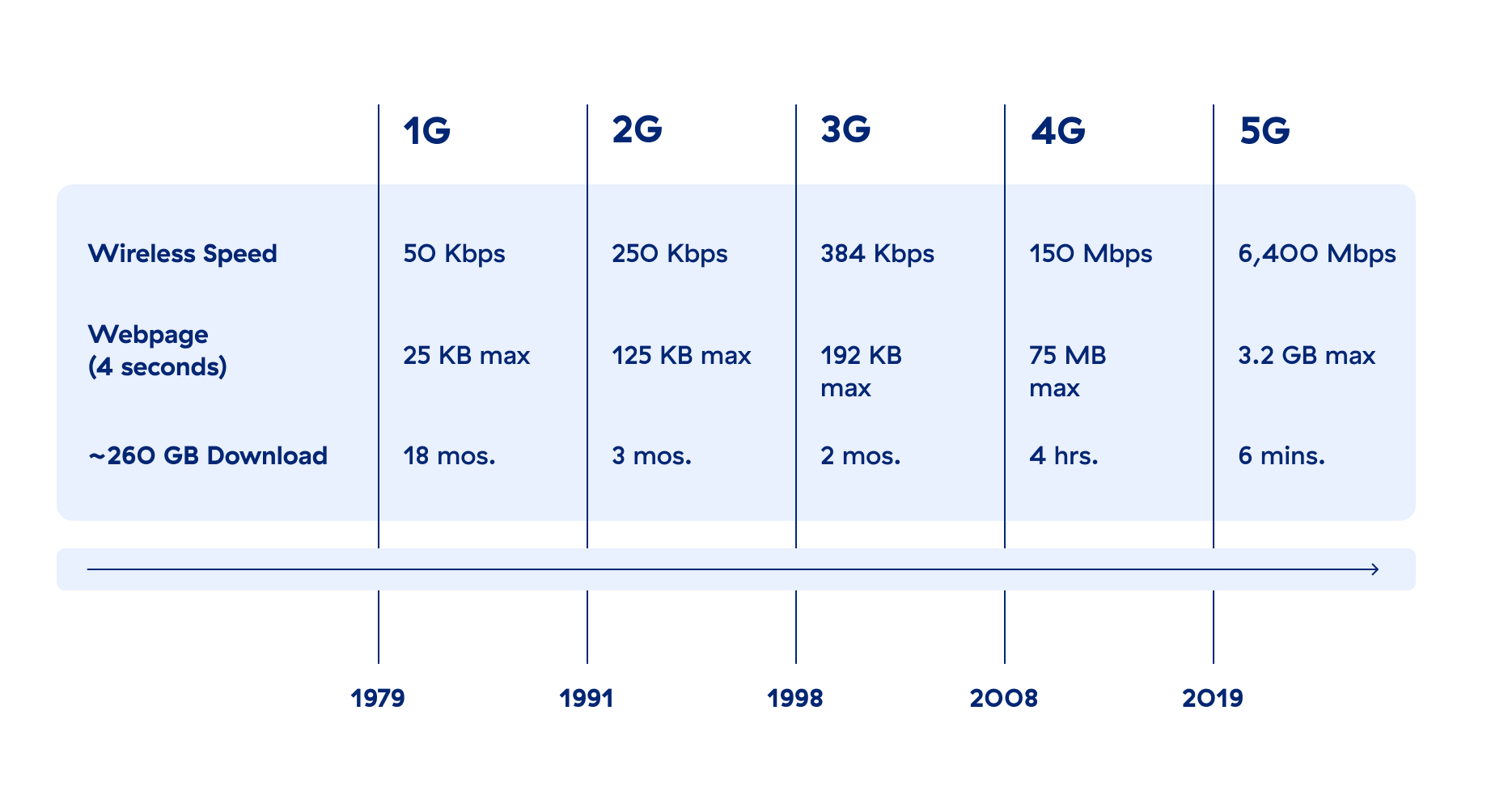

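How 5G Works

5G combines a wide range of frequency bands to raise throughput to as much as 10 Gbps (10 to 100 times faster than 4G LTE). Web experiences that feel "fast enough" today will soon feel like dial-up by comparison. 5G also delivers ultra-low latency, bringing network performance close to real time. Where a data packet once took 20 to 1,000 milliseconds to travel from a laptop or smartphone to a workload, 5G can cut that to a few milliseconds when the use case demands it.

Of course, raw link speed is not the only factor. Physical speed alone cannot keep latency down: distance, bandwidth congestion, software and processing faults, and even physical obstructions can all add latency.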
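
To make the distance factor concrete, the following minimal sketch estimates round-trip propagation delay over optical fiber. It assumes a signal speed of roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores queuing, processing, and radio-access delays, so the result is a lower bound rather than a measured latency. The function name and constant are illustrative, not part of any 5G specification.

```python
# Approximate signal speed in optical fiber (assumption): ~200,000 km/s,
# which is 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay in ms for a one-way distance in km."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    # The signal travels to the server and back, hence the factor of 2.
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


if __name__ == "__main__":
    for distance_km in (10, 100, 1000):
        delay = round_trip_propagation_ms(distance_km)
        print(f"{distance_km:>5} km -> {delay:.2f} ms round trip")
```

Under these assumptions, a server 1,000 km away already costs about 10 ms in propagation alone, while one 10 km away costs about 0.1 ms, which is why distance matters regardless of link speed.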

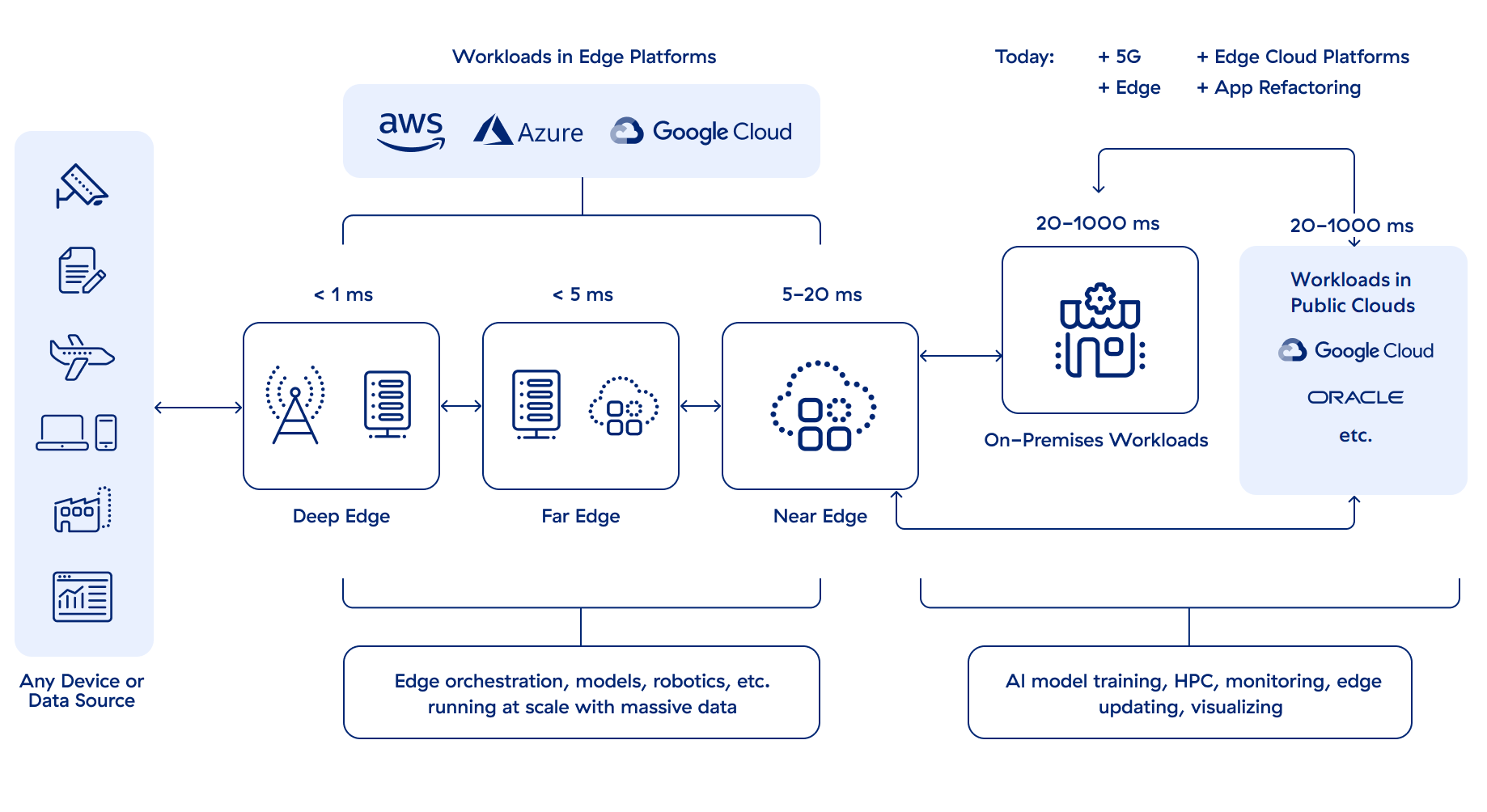

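To achieve ultra-low latency, the most important thing about compute resources is placing them close to end-user devices. Placing servers physically close to end-user devices is called "edge computing." Edge computing falls into several types depending on the latency range:

- Far edge: Latency of 5 to 20 milliseconds. Compute runs relatively far from the cloud and relatively close to devices.

- Near edge: Latency above 20 milliseconds. Compute runs closer to the cloud than to devices.

- Deep edge: Compute runs where latency is under 5 milliseconds.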
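
The latency ranges in the list above can be sketched as a small classifier. This is an illustration only: the function name is hypothetical, and treating exactly 5 ms and exactly 20 ms as "far edge" is an assumption, since the ranges above do not say which tier owns the boundary values.

```python
def classify_edge_tier(latency_ms: float) -> str:
    """Map a latency in milliseconds to the edge tier named in the text."""
    if latency_ms < 0:
        raise ValueError("latency_ms must be non-negative")
    if latency_ms < 5:
        return "deep edge"   # under 5 ms
    if latency_ms <= 20:
        return "far edge"    # 5 to 20 ms (boundary handling is an assumption)
    return "near edge"       # above 20 ms


if __name__ == "__main__":
    for latency in (2, 12, 35):
        print(f"{latency} ms -> {classify_edge_tier(latency)}")
```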

Figure: Overview of a basic 5G network

The level of edge computing required depends on the use case, latency requirements, and budget. Not every case needs near-real-time performance, but many need performance that is "close enough" to real time, which means keeping latency to around 20 milliseconds.

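5G is also designed to be highly scalable, with large numbers of connected devices in mind. The enhanced radio access network supports 1,000 times the bandwidth and 100 times the connected devices per unit area compared with 4G LTE. Consumer mobile devices, enterprise devices, smart sensors, autonomous vehicles, drones, and more can all share the same 5G network without degrading service levels.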

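5G vs. 4G

4G and 5G are both high-speed wireless communication technologies for mobile devices.

In terms of technical performance, data rates reach about 100 Mbps on 4G and up to 20 Gbps on 5G. Device-to-device communication latency is about 60 to 100 milliseconds on 4G; 5G latency is far lower and can potentially be kept under 5 milliseconds. As for bandwidth and connection volume, the number of devices supported per square kilometer is in the thousands for 4G and 1 million for 5G.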
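
As a rough worked example of what those data rates mean, the sketch below computes the ideal time to move a file at each peak rate. It assumes the peak figures quoted above (about 100 Mbps and 20 Gbps), decimal units (1 GB = 10^9 bytes), and no protocol overhead or congestion, so real-world transfers would take longer. The function and constant names are illustrative.

```python
PEAK_4G_BPS = 100e6   # ~100 Mbps, peak figure quoted in the text
PEAK_5G_BPS = 20e9    # 20 Gbps, peak figure quoted in the text


def transfer_time_seconds(size_bytes: float, rate_bits_per_second: float) -> float:
    """Return the ideal transfer time in seconds, ignoring overhead and congestion."""
    if rate_bits_per_second <= 0:
        raise ValueError("rate_bits_per_second must be positive")
    return size_bytes * 8 / rate_bits_per_second


if __name__ == "__main__":
    one_gigabyte = 1e9  # decimal gigabyte (assumption)
    print(f"4G: {transfer_time_seconds(one_gigabyte, PEAK_4G_BPS):.1f} s")
    print(f"5G: {transfer_time_seconds(one_gigabyte, PEAK_5G_BPS):.1f} s")
```

Under these idealized assumptions, a 1 GB file takes about 80 seconds at the 4G peak rate and about 0.4 seconds at the 5G peak rate.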

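However, 5G infrastructure is more complex, which also enlarges the attack surface. Because 5G supports far more connected devices than 4G and enables much faster data transfer, it is more exposed to cyberattacks. In addition, the use of newer technologies such as virtualization and software-defined networking within 5G networks creates new vulnerabilities that threat actors may exploit.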

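Finally, one of the most important differences between 5G and 4G is that 5G operates at the network edge rather than on the traditional infrastructure that 4G depends on. This means traditional network infrastructure cannot provide effective security on its own; security must also be delivered at the network edge.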

Next, let's look at how 5G relates to edge computing and security.

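5G and Edge Computing

5G technology and edge computing (distributed computing that enables data processing and storage at the edge of the network) are changing how we communicate and do business. Advances in speed, real-time data transmission, and mobile network density underpin modern communications and provide the capacity that IoT-connected devices, automation, VR and augmented reality interfaces, smart cities, and more depend on.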

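In fact, 5G provides the high speed and low latency that edge computing needs to work effectively. Because data can be sent and received almost instantly, real-time communication and analytics become possible for time-critical services and other applications that require an immediate response, such as Industry 4.0 capabilities.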

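Many other factors go into 5G network design, but physical proximity to devices is key to achieving low latency. Core network infrastructure, application servers, and, most importantly, security infrastructure therefore need to move from centralized data centers to the network edge, closer to users. This is where zero trust comes into consideration.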

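5G Security Challenges

The 5G standards make it clear that security is a key design principle. This is commonly read as "5G is secure by design," meaning that 5G was designed with security in mind.

In practice, however, the scope of 5G security is limited to the network itself. It does not cover the devices and workloads that customers use to communicate over the 5G network.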

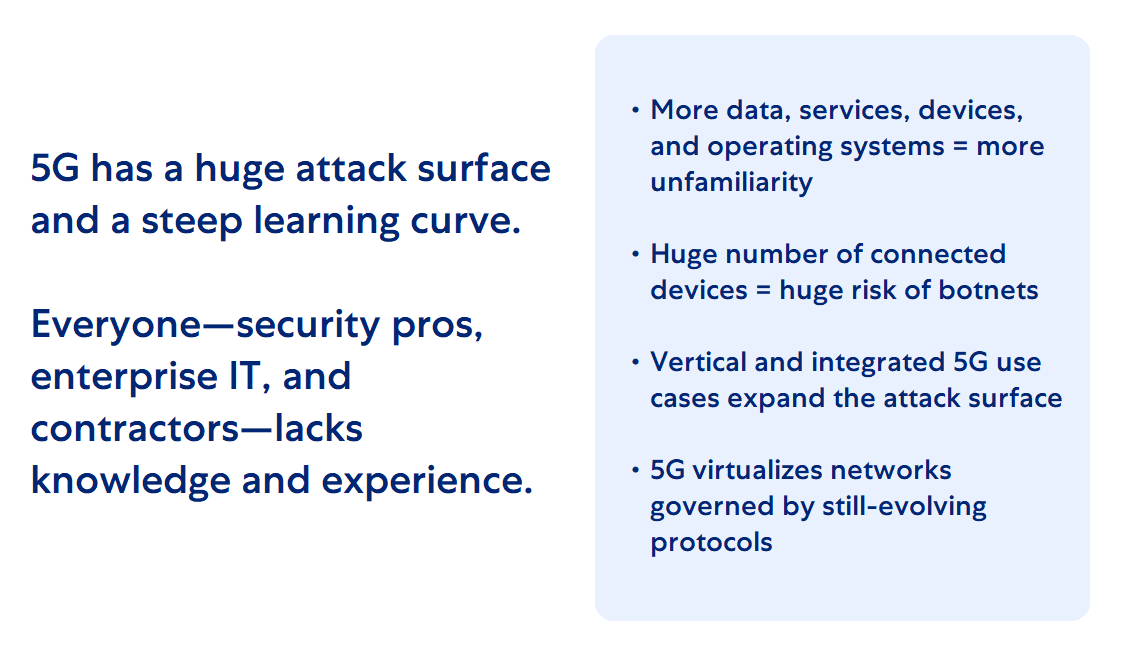

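As a result, while 5G drives more data, services, devices, operating systems, virtualization, and cloud use, it also creates a very large attack surface, and edge workloads have become an attractive new target for many attackers.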

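5G security has a steep learning curve, so many security professionals, enterprise IT teams, and subcontractors lack the knowledge and experience needed to protect applications running on 5G networks. Because 5G merges greatly expanded IT systems with wireless telecommunications networks, it calls for cybersecurity specialists who are well versed in both fields.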

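Zero Trust for Maximum 5G Security

Zero trust simplifies the protection of 5G and edge workloads and devices, so adopting it makes deploying 5G, and addressing the security challenges that come with it, easier, faster, and more secure.

Zero trust is an architecture for comprehensive security monitoring in a dynamic threat environment, fine-grained risk-based access control, systematic security automation across the infrastructure, and real-time protection of critical data assets.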
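
The idea of fine-grained, risk-based access control can be illustrated with a minimal default-deny sketch. Everything here is hypothetical: the `AccessRequest` fields, the example policy, the 0.0 to 1.0 risk scale, and the threshold are assumptions chosen for illustration, and this is not how Zscaler or any particular product implements zero trust.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """A single request from a user or device to one application (hypothetical model)."""
    principal: str            # user or device identifier
    app: str                  # the specific application being requested
    identity_verified: bool   # result of authentication
    device_compliant: bool    # result of a device posture check
    risk_score: float         # 0.0 (low) to 1.0 (high); scale is an assumption


# Hypothetical policy: each principal may reach only explicitly listed apps.
POLICY = {
    "alice": {"telemetry-dashboard"},
    "sensor-17": {"telemetry-ingest"},
}
MAX_RISK = 0.5  # hypothetical threshold


def decide(request: AccessRequest, policy=POLICY, max_risk: float = MAX_RISK) -> bool:
    """Return True only if every check passes; anything else is denied."""
    if not request.identity_verified:
        return False
    if not request.device_compliant:
        return False
    if request.risk_score > max_risk:
        return False
    # Access is granted per application, never to a whole network segment.
    return request.app in policy.get(request.principal, set())


if __name__ == "__main__":
    ok = AccessRequest("sensor-17", "telemetry-ingest", True, True, 0.1)
    lateral = AccessRequest("sensor-17", "telemetry-dashboard", True, True, 0.1)
    print(decide(ok), decide(lateral))
```

The design choice worth noting is that the function evaluates each request against a per-application allowlist, so a compromised device cannot reach other applications simply because it shares a network with them.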

How Zscaler Can Help: Implementing a Zero Trust Security Model

Zscaler's zero trust architecture for private 5G helps providers by securing and simplifying private 5G deployments on a centralized 5G core.

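- Enable zero trust connectivity: Secure site-to-site connectivity over the internet without a routable network (5G UPF to core), and ensure users and devices (UE) are never on the same routable network as apps in the MEC, data center (DC), or cloud.

- Secure apps and data: Minimize the egress and ingress attack surface, and identify workload vulnerabilities, misconfigurations, and excessive permissions.

- Secure communications: Prevent compromise and data exfiltration with inline content inspection.

- Manage digital experience: Resolve performance issues quickly with visibility into device, network, and app performance.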

Zscaler Private Access App Connector, Branch Connector, and Cloud Connector, together with Zscaler Client Connector, protect customer devices and workloads on today's 5G networks. In mid-2023, a new version of Branch Connector designed to protect against compromise of 5G core technologies was released, with further enhancements planned for 2024.